This guide explains how to set up a Splunk server and the Cloud Data Connector (CDC) so that CSP can send logs to Splunk.

This tutorial will discuss the following:

Section 1: How to create an On-Prem Host and enable the CDC application from the CSP portal. This section also describes how to deploy a BloxOne On-Prem Host on Docker and join it to the CSP portal.

Section 2: How to set up an external Splunk server on Docker.

Section 3: How to forward data from CSP to the external Splunk server. This section covers how to get DNS query and response data from the CSP portal to an external Splunk server.

Section 4: Understanding the data flow.

Disclaimer: This guide is intended for testing CDC in a local lab environment. The free Splunk version limits how much data it can index, so you may want to get a license from Splunk if you plan to index more data.

If you want to configure CDC in production, reach out to your local Infoblox Systems Engineer or account team for a production-ready deployment that meets your requirements.

Lab Requirements:

-Access to the CSP Portal to configure CSP.

-2 VMs (Ubuntu 16.04.x) with Docker installed and internet connectivity.

-One of the VMs will run the BloxOne Cloud Data Connector and the other will run a Splunk server.

-In this lab setup, the CDC VM has the hostname “cdc.infobloxlab.com” and the external Splunk server has the hostname “splunk.infobloxlab.com”.

Below are the details of the VMs used in this tutorial: (Both the VMs are in the same subnet)

| VM | Hostname | Description | System Details | IP Address |
| --- | --- | --- | --- | --- |
| VM1 | cdc.infobloxlab.com | Runs CDC | 4 vCPU, 8GB, 64GB Disk | 10.0.48.87 |
| VM2 | splunk.infobloxlab.com | Runs Splunk Server | 4 vCPU, 16GB, 120GB Disk | 10.0.48.124 |

[Important: To use CDC in a Bare-Metal Docker deployment, port 22 must be free. If you are running SSH, you need to change the default SSH port from 22 to another port. I have attached another document with instructions on how to change the SSH port for those who may need it.]

Introduction:

-Why use a Cloud Data Connector?

-The Cloud Data Connector (CDC) is a piece of software that can be deployed on Docker or as an OVA on an ESXi server. It can run either on-prem or with a cloud provider, depending on your requirements.

-You can think of CDC as a middleman that receives data from a “source” and transfers it to a “destination”.

For example: You can send data such as “Malicious Hits” from the “CSP Portal” to a destination such as an external Splunk server. To do so, you need a deployed CDC that receives the data from CSP and sends it on to Splunk. In this case, from CDC’s perspective, the “source” is “CSP” and the “destination” is “Splunk”. The key point here is that CDC acts as the medium that transfers data from CSP to an external Splunk server.

Below is a visual representation that shows the traffic flow. [Source (CSP) => CDC => Destination (Splunk Server).]

-From CDC’s perspective, the source is the “CSP Portal” and the destination to which the data is forwarded is “Splunk”.

CDC Sources: In short, a “source” is where CDC receives data from. For example, CDC can receive data from NIOS, in which case “NIOS” is considered to be the “source”. In this guide, we will be using the “CSP Portal” as the “source” for CDC.

CDC Destinations: As the name suggests, a “destination” is where the data is forwarded to. In this guide, we will be sending data to an external Splunk server, so the “destination” is “Splunk”.

Section 1: How to set up and configure BloxOne CDC on Docker.

In this section, we will create an On-Prem Host and enable the CDC application on it. The basic workflow is the following:

- First, we will create an On-Prem host in the CSP portal and enable the CDC application.

- Make sure to change the SSH listening port to a port other than 22. CDC requires port 22 to be free for it to work properly.

-Login to https://csp.infoblox.com with your credentials.

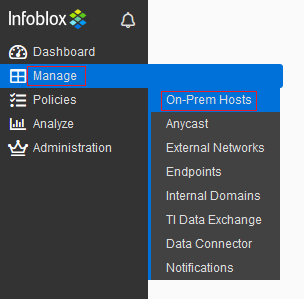

-Navigate to Manage>On-Prem Hosts.

-Click on “Create On-Prem Host”.

-Provide a name for your On-Prem host and enable the Data Connector application as shown below. Once done, click on “Save & Close”.

-You will now receive a pop-up message with an API key. You will need this API key when deploying CDC on Docker later in this tutorial, so keep it handy.

-You can click on “Close” after you have saved the API key on your computer.

Note: You can also get the API key from the CSP UI later in case you forgot to copy it.

You should now see the On-Prem host that you created in the CSP UI in “Pending” state. The “Pending” status is expected as we have yet to load a VM with the BloxOne CDC image and join it to the CSP portal.

If you have got this far, you have successfully created an On-Prem Host in CSP and enabled the CDC application on it. Now, we need a VM with the BloxOne Docker image loaded so that it can join the CSP portal.

Below are the instructions to get the Docker image, load it on the Docker host and register the host with the CSP portal.

-Click on “Administration”>“Downloads” in the CSP portal. This takes you to the various BloxOne downloads available.

-Here, we need the link to the Docker image for BloxOne. Right-click on “Download Package for Docker” and select “Copy Link Location”.

The copied link in this example is:

http://ib-noa-prod.csp.infoblox.com.s3-website-us-east-1.amazonaws.com/BloxOne_OnPrem_Docker_3.2.7.tar.gz

Install Docker:

-Run the following commands to install Docker on your host. (You will need Docker installed on both VMs.)

curl -fsSL https://get.docker.com -o get-docker.sh

sudo sh get-docker.sh

sudo usermod -aG docker $USER

Next, change the SSH port from 22 to port 23. [Refer to the attached document on how to change the SSH port to another port.]
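Since the attached document is not reproduced here, the port change can be sketched as follows. This is a minimal sketch and not the procedure from that document: the helper name `set_ssh_port` is hypothetical, and it assumes a standard OpenSSH configuration at /etc/ssh/sshd_config and GNU sed (as shipped on Ubuntu).

```shell
# Hypothetical helper: point sshd at a new port by editing an
# sshd_config-style file. Usage: set_ssh_port <new-port> [config-file]
set_ssh_port() {
    new_port="$1"
    config="${2:-/etc/ssh/sshd_config}"
    if grep -Eq '^[[:space:]]*Port[[:space:]]+[0-9]+' "$config"; then
        # An active Port directive exists: rewrite it in place.
        sed -i -E "s/^[[:space:]]*Port[[:space:]]+[0-9]+.*/Port ${new_port}/" "$config"
    else
        # No active directive (sshd then defaults to 22): add one at the
        # top of the file so it sits ahead of any Match blocks.
        tmp="$(mktemp)"
        { printf 'Port %s\n' "$new_port"; cat "$config"; } > "$tmp"
        cat "$tmp" > "$config"
        rm -f "$tmp"
    fi
}

# Example (run from a root shell on VM1, not executed here):
#   set_ssh_port 23
#   sshd -t                  # check the configuration for syntax errors
#   systemctl restart ssh    # the SSH service is named "ssh" on Ubuntu
```

Keep your current SSH session open until you have confirmed that you can log in on the new port, for example with `ssh -p 23 user@10.0.48.87`.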

-After the SSH port has been changed, perform the following:

-We now have the link to the BloxOne Docker image, copied from the CSP page. Next, SSH into VM1 (refer to the table at the beginning of the article) and download the image. [The VM that runs CDC must not have SSH listening on port 22. For example, change the SSH port on your VM to port 23 and restart the SSH service.]

-Before setting up CDC, make sure your Ubuntu host is up to date and has the “wget” utility installed. This utility is used to download the BloxOne image. You can run the following command to upgrade the host and install “wget”.

sudo apt update && sudo apt upgrade -y && sudo apt install wget -y

Now, from VM1 run the following to download the BloxOne image.

cd ~/

wget http://ib-noa-prod.csp.infoblox.com.s3-website-us-east-1.amazonaws.com/BloxOne_OnPrem_Docker_3.2.7.tar.gz

Now, we have the BloxOne Docker image downloaded on VM1. To load the Docker image, you can run the following:

sudo docker load -i BloxOne_OnPrem_Docker_3.2.7.tar.gz

-To view the loaded image, you can use the following command:

sudo docker images

-Take note of the image tag, as we will need it later. Now that the Docker image is loaded on the host, we need to create the “blox.noa” container. Below is the syntax used to run the BloxOne application.

sudo docker run -d \
  --name blox.noa \
  --network=host \
  -v /var/run/docker.sock:/var/run/docker.sock \
  -v /var/lib/infoblox/certs:/var/lib/infoblox/certs \
  -v /etc/onprem.d/:/etc/onprem.d/ \
  infobloxcto/onprem.agent:<ENTER-IMAGE-TAG-HERE> \
  --api.key <ENTER-API-KEY-HERE>

Replace the placeholders <ENTER-IMAGE-TAG-HERE> and <ENTER-API-KEY-HERE> with the image tag shown by “sudo docker images” and the API key from the CSP portal. After the command completes, you can check the status of the containers as shown below:

sudo docker ps -a

Below is a screenshot of the entire process.

[Important: When using the docker run command to create the container, do not change the container name “blox.noa”, as doing so may cause issues.]
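To avoid copying the image tag by hand, the lookup and the run command above can be combined. This is a rough sketch: the function names `onprem_agent_tag` and `run_blox_noa` are hypothetical, and it assumes the current user can run `docker` without sudo (the `usermod -aG docker $USER` step earlier, after logging out and back in).

```shell
# Hypothetical helper: print the tag of the loaded on-prem agent image.
onprem_agent_tag() {
    docker images --format '{{.Tag}}' infobloxcto/onprem.agent | head -n 1
}

# Hypothetical helper: start blox.noa with the detected tag.
# Usage: run_blox_noa <api-key-from-csp>
run_blox_noa() {
    api_key="$1"
    tag="$(onprem_agent_tag)"
    if [ -z "$tag" ]; then
        echo "infobloxcto/onprem.agent image is not loaded" >&2
        return 1
    fi
    # Same options as the command above; the container name must stay blox.noa.
    docker run -d \
        --name blox.noa \
        --network=host \
        -v /var/run/docker.sock:/var/run/docker.sock \
        -v /var/lib/infoblox/certs:/var/lib/infoblox/certs \
        -v /etc/onprem.d/:/etc/onprem.d/ \
        "infobloxcto/onprem.agent:${tag}" \
        --api.key "$api_key"
}
```

After sourcing these definitions, calling `run_blox_noa <ENTER-API-KEY-HERE>` on VM1 should be equivalent to the manual command, provided only one tag of the image is loaded.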

-Now, blox.noa will register with the CSP portal and understand that this host needs to run the CDC application. So, it will download a few more docker images and run them. This usually takes about 5-10 minutes to download the images and run them.

-To view the list of docker images that were downloaded, you can run the following command:

sudo docker images

-Below is a screenshot from the local lab environment:

-Now, check the list of running containers on the host. You will see that there are multiple containers running on the host prefixed “cdc” which are needed to run CDC.

-Now, you should be able to see that the CDC On-Prem host is “Online” in the CSP portal. Below is a screenshot:
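Instead of re-running the container listing by hand during the 5-10 minute wait, the check for “cdc”-prefixed containers can be polled. This is a minimal sketch with hypothetical function names, assuming `docker` can be run without sudo; it only confirms that such containers are running, not that every CDC service is healthy.

```shell
# Hypothetical helper: count running containers whose name starts with "cdc".
cdc_container_count() {
    docker ps --format '{{.Names}}' | grep -c '^cdc'
}

# Hypothetical helper: poll until at least one cdc container is running.
# Usage: wait_for_cdc [attempts] [seconds-between-attempts]
wait_for_cdc() {
    attempts="${1:-40}"    # defaults give 40 x 15 s = 10 minutes
    interval="${2:-15}"
    i=0
    while [ "$i" -lt "$attempts" ]; do
        if [ "$(cdc_container_count)" -gt 0 ]; then
            docker ps --format '{{.Names}} {{.Status}}'
            return 0
        fi
        sleep "$interval"
        i=$((i + 1))
    done
    echo "no cdc containers are running yet" >&2
    return 1
}
```

Calling `wait_for_cdc` on VM1 after starting blox.noa prints the running containers once the CDC ones appear. The host should then also show as “Online” in the CSP portal.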

That’s great! To summarize, we have done the following so far:

-Created an On-Prem Host in the CSP Portal and enabled the CDC application.

-Logged in to our VM, downloaded the BloxOne On-Prem image and loaded it on the host.

-Created the container “blox.noa”, which downloaded additional images to run the CDC application.

-Verified that the On-Prem host running CDC is “Online” in the CSP Portal.

Section 2: How to set up an external Splunk server on Docker.

In this section, we will set up Splunk Free edition on Docker on a different VM (VM2). This is the VM to which CDC will send data.

-SSH into VM2 (the VM that will run Splunk) and run the following commands:

sudo apt update && sudo apt upgrade -y

-Now, install Docker on this VM/instance. (See the instructions in the previous section of this guide.)

-Run the following to create a Docker “bridge” network and list the available networks:

sudo docker network create --driver bridge --attachable splunk

sudo docker network ls

-Now, we will create the splunk server and the universal forwarder in docker. To do so, run the following docker commands:

sudo docker run --network splunk --name so1 --hostname so1 -p 8000:8000 -e "SPLUNK_PASSWORD=123splunklabA" -e "SPLUNK_START_ARGS=--accept-license" -it splunk/splunk:latest

-Once it is complete, you should see a message such as below:

-Press CTRL+P and CTRL+Q to escape out of the Docker interactive mode so that we can create another container on the same host. (Alternatively, open another SSH session to the Splunk VM/instance and continue with the following.)

-To verify that the splunk container is up, you can run “docker ps -a” to validate.

-Now, we can run the following to create a Splunk Universal forwarder on the same instance.

sudo docker run --network splunk --name uf1 --hostname uf1 -p 9997:9997 -e "SPLUNK_PASSWORD=123splunklabA" -e "SPLUNK_START_ARGS=--accept-license" -e "SPLUNK_STANDALONE_URL=so1" -it splunk/universalforwarder:latest

Notes:

-Replace the values in the commands above (for example, the password) as per your requirements.

-Here, the password to log in to the Splunk UI is set to 123splunklabA. You can change it accordingly, keeping in mind that Splunk has a password complexity requirement.

-Once the container has been created, you should see the message “Ansible playbook complete”.

-Press CTRL+P and CTRL+Q to escape out of the Docker interactive mode. (Alternatively, open another SSH session to the Splunk VM/instance and continue with the following.)

-To verify that the universal forwarder is up, you can run “docker ps -a” to validate.

-Here, we can see that both the universal forwarder and the Splunk server container are up and running.
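The “Ansible playbook complete” message can also be checked from the container logs, which is convenient if you start the containers detached (`-d` instead of `-it`) or check from a second session. This is a small sketch with hypothetical function names. It assumes the container names so1 and uf1 used above, and that `docker` can be run without sudo.

```shell
# Hypothetical helper: succeed once the container log shows the completion message.
splunk_ready() {
    docker logs "$1" 2>&1 | grep 'Ansible playbook complete' > /dev/null
}

# Hypothetical helper: poll a container until provisioning has finished.
# Usage: wait_for_splunk <container> [attempts] [seconds-between-attempts]
wait_for_splunk() {
    name="$1"
    attempts="${2:-60}"    # defaults give 60 x 10 s = 10 minutes
    interval="${3:-10}"
    i=0
    while [ "$i" -lt "$attempts" ]; do
        if splunk_ready "$name"; then
            echo "$name is ready"
            return 0
        fi
        sleep "$interval"
        i=$((i + 1))
    done
    echo "$name did not finish provisioning in time" >&2
    return 1
}
```

For example, `wait_for_splunk so1 && wait_for_splunk uf1` on VM2 returns once both containers report that provisioning is complete.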

-Now, I can access the Splunk UI at http://ip-of-box-running-splunk-server:8000

Username: admin

Password: 123splunklabA <- This is from the value of the variable passed when using the “docker run” command

-Click on “Got It” in the page.

-Click on “Settings”>“Forwarding and receiving”.

-Under “Receive data”> click on “Configure receiving”.

-Check if port 9997 is enabled.

Note: If there is no entry, then click on “New Receiving Port” and add port 9997 and make sure that it is enabled.
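Before pointing CDC at this port, it can help to confirm from VM1 that port 9997 on the Splunk VM is reachable over the network. This is a minimal sketch: the function name `check_splunk_port` is hypothetical, and it assumes `nc` (netcat) is installed on VM1. It only tests TCP reachability, not whether Splunk accepts the data.

```shell
# Hypothetical helper: test TCP reachability of the Splunk receiving port.
# Usage: check_splunk_port <host> [port]
check_splunk_port() {
    host="$1"
    port="${2:-9997}"
    # -z: only scan, send no data; -w 3: give up after 3 seconds
    if nc -z -w 3 "$host" "$port"; then
        echo "reachable: ${host}:${port}"
    else
        echo "NOT reachable: ${host}:${port}" >&2
        return 1
    fi
}

# Example (run on VM1, using the Splunk VM address from the table):
#   check_splunk_port 10.0.48.124
```

If the port is not reachable, check that the container publishing port 9997 is running on VM2 and that no firewall sits between the two VMs.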

Now, we need to create an index in Splunk. To do so, click on Settings>Indexes.

-Click on “New Index”.

-Add your index name. In this lab, I have set the “Index Name” as “cdclab”.

-You should be able to see the newly added index in the “Indexes” section.

-Click on “Settings”>“Source types”.

-Click on “New Source Type”.

-Add the name as “ib:rpz:captures”

-This should be visible under “Source Types”.

Section 3: How to forward data from CSP to the external Splunk server.

-Now that the Splunk server is configured, we can configure the data flow from CSP into the Cloud Data Connector (CDC) and have CDC forward the data to the external Splunk server (the destination).

CSP Portal => Cloud Data Connector => Splunk Server.

First, let’s create a Splunk destination.

Adding a Splunk Destination:

-Navigate to Manage>Data Connector>Destination Configuration and click on “Create”.

-Enter a name for your Splunk destination (this lab uses “CDC-to-Splunk”). For now, keep the “State” as “Disabled”. It will be enabled after the traffic flow is configured, as discussed later in this guide.

-In this lab setup, the CDC VM/instance and the external Splunk server are in the same subnet. Hence, I have used the private IP “10.0.48.124” of the Splunk server. Use “9997” as the port. [Refer to the above sections of this guide.]

-The Indexer Name is “cdclab” which was configured in Splunk earlier. [Refer to above sections of this guide]

-As this is a local lab for testing, I have used “Insecure Mode”.

-Now, we have configured a destination. Next, we will create a traffic flow.

Traffic Flow Configuration:

Here, we configure the traffic flow so that CSP sends logs to CDC and CDC forwards the received logs to the Splunk server (the destination configured above). Traffic flows are managed under Manage>Data Connector>Traffic Flow Configuration.

- Add a Name (this lab uses “CSP-to-CDC”) and a Description for the traffic flow, and keep the state as “Disabled” for now.

- Under “CDC Enabled Host”, make sure that the On-Prem host running CDC is selected.

- Select the “Source” as “BloxOne Cloud Source” and the “Destination” as “CDC-to-Splunk”.

- Set the “Log Type” to the types of logs that need to be sent from CSP to the CDC application.

Summary so far: We configured a destination which is Splunk and configured a traffic flow for data.

Enabling Destination and traffic flow:

Now we need to enable the destination and the traffic flow.

-Navigate to Manage>Data Connector>Destination Configuration, edit Destination “CDC-to-Splunk”.

-Change “State” to “Enabled”.

-Now, to enable the traffic flow, perform the following:

-Navigate to Manage>Data Connector>Traffic Flow Configuration and edit the traffic flow “CSP-to-CDC”.

-Change “State” to “Enabled”.

Once the traffic flow is enabled, you should be able to see the “State” as “Active”.

Now, you can access the Splunk UI and search for the index “cdclab”.

index="cdclab"

Below is a screenshot of the Splunk UI receiving data from CSP via CDC.

Sources/References:

https://docs.infoblox.com/display/BloxOneDDI/Bare-Metal+Docker+Deployment

https://github.com/splunk/docker-splunk

https://docs.splunk.com/Documentation/Splunk/latest/Installation/SystemRequirements